案例 :探索性文本数据分析的新手教程(Amazon案例研究)

本文长度为5500字,建议阅读10+分钟

本文利用Python对Amazon产品的反馈对数据文本进行探索性研究与分析,并给出结论。

理解问题的设定

基本的文本数据预处理

用Python清洗文本数据

为探索性数据分析(EDA)准备文本数据

基于Python的Amazon产品评论探索性数据分析

https://www.kaggle.com/datafiniti/consumer-reviews-of-amazon-products

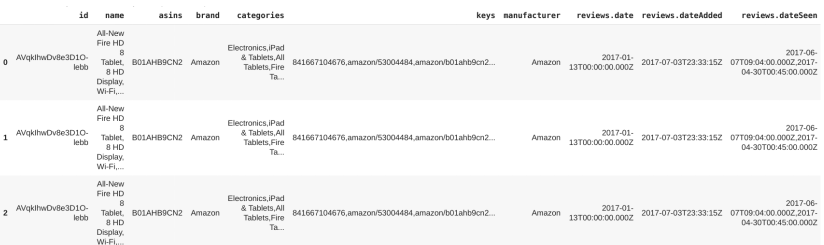

import?numpy?as?np??import?pandas?as?pd??#?可视化?import?matplotlib.pyplot?as?plt??#?正则化??import?re??#?处理字符串import?string??#?执行数学运算?import?math????#?导入数据df=pd.read_csv('dataset.csv')?print("Shape?of?data=>",df.shape)?

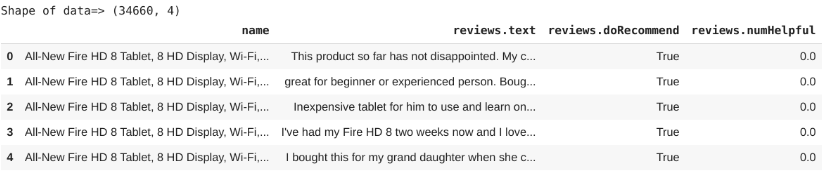

df=df[['name','reviews.text','reviews.doRecommend','reviews.numHelpful']]??print("Shape?of?data=>",df.shape)??df.head(5)?

df.isnull().sum()?

df.dropna(inplace=True)??df.isnull().sum()??

df=df.groupby('name').filter(lambda?x:len(x)>500).reset_index(drop=True)??print('Number?of?products=>',len(df['name'].unique()))??

df['reviews.doRecommend']=df['reviews.doRecommend'].astype(int)??df['reviews.numHelpful']=df['reviews.numHelpful'].astype(int)??

df['name'].unique()

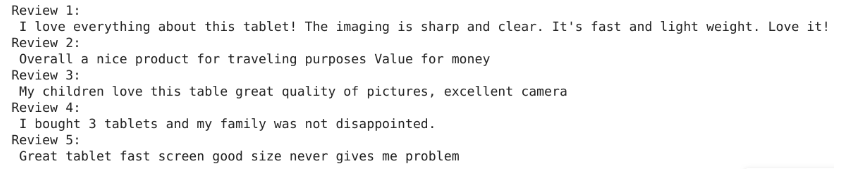

df['name']=df['name'].apply(lambda x: x.split(',,,')[0])for?index,text?in?enumerate(df['reviews.text'][35:40]):???????print('Review?%d:\n'%(index+1),text)??

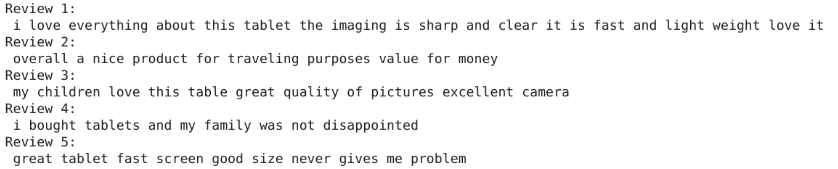

扩展缩略语;

将评论文本小写;

删除数字和包含数字的单词;

删除标点符号。

?#?Dictionary?of?English?Contractions???contractions_dict?=?{?"ain't":?"are?not","'s":"?is","aren't":?"are?not",????????????????????????"can't":?"cannot","can't've":?"cannot?have",????????????????????????"'cause":?"because","could've":?"could?have","couldn't":?"could?not",????????????????????????"couldn't've":?"could?not?have",?"didn't":?"did?not","doesn't":?"does?not",????????????????????????"don't":?"do?not","hadn't":?"had?not","hadn't've":?"had?not?have",????????????????????????"hasn't":?"has?not","haven't":?"have?not","he'd":?"he?would",????????????????????????"he'd've":?"he?would?have","he'll":?"he?will",?"he'll've":?"he?will?have",????????????????????????"how'd":?"how?did","how'd'y":?"how?do?you","how'll":?"how?will",????????????????????????"I'd":?"I?would",?"I'd've":?"I?would?have","I'll":?"I?will",?????????????????????"I'll've":?"I?will?have","I'm":?"I?am","I've":?"I?have",?"isn't":?"is?not",????????????????????????"it'd":?"it?would","it'd've":?"it?would?have","it'll":?"it?will",????????????????????????"it'll've":?"it?will?have",?"let's":?"let?us","ma'am":?"madam",????????????????????????"mayn't":?"may?not","might've":?"might?have","mightn't":?"might?not",????????????????????????"mightn't've":?"might?not?have","must've":?"must?have","mustn't":?"must?not",????????????????????????"mustn't've":?"must?not?have",?"needn't":?"need?not",????????????????????????"needn't've":?"need?not?have","o'clock":?"of?the?clock","oughtn't":?"ought?not",????????????????????????"oughtn't've":?"ought?not?have","shan't":?"shall?not","sha'n't":?"shall?not",????????????????????????"shan't've":?"shall?not?have","she'd":?"she?would","she'd've":?"she?would?have",????????????????????????"she'll":?"she?will",?"she'll've":?"she?will?have","should've":?"should?have",????????????????????????"shouldn't":?"should?not",?"shouldn't've":?"should?not?have","so've":?"so?have",????????????????????????"that'd":?"that?would","that'd've":?"that?would?have",?"there'd":?"there?would",????????????????????????"there'd've":?"there?would?have",?"they'd":?"they?would",????????????????????????"they'd've":?"they?would?have","they'll":?"they?will",????????????????????????"they'll've":?"they?will?have",?"they're":?"they?are","they've":?"they?have",??"to've": "to have","wasn't": "was not","we'd": "we would",??????????????????????"we'd've":?"we?would?have","we'll":?"we?will","we'll've":?"we?will?have",??"we're": "we are","we've": "we have", "weren't": "were not","what'll": "what will","what'll've": "what will have","what're": "what are", "what've": "what have","when've": "when have","where'd": "where did", "where've": "where have",??????????????????????"who'll":?"who?will","who'll've":?"who?will?have","who've":?"who?have",??"why've": "why have","will've": "will have","won't": "will not",??????????????????????"won't've":?"will?not?have",?"would've":?"would?have","wouldn't":?"would?not",????????????????????????"wouldn't've":?"would?not?have","y'all":?"you?all",?"y'all'd":?"you?all?would",????????????????????????"y'all'd've":?"you?all?would?have","y'all're":?"you?all?are",????????????????????????"y'all've":?"you?all?have",?"you'd":?"you?would","you'd've":?"you?would?have",????????????????????????"you'll":?"you?will","you'll've":?"you?will?have",?"you're":?"you?are",????????????????????????"you've":?"you?have"}??????#?Regular?expression?for?finding?contractions???contractions_re=re.compile('(%s)'?%?'|'.join(contractions_dict.keys()))??????#?Function?for?expanding?contractions???def?expand_contractions(text,contractions_dict=contractions_dict):?????def?replace(match):???????return?contractions_dict[match.group(0)]?????return?contractions_re.sub(replace,?text)??????#?Expanding?Contractions?in?the?reviews???df['reviews.text']=df['reviews.text'].apply(lambda?x:expand_contractions(x))??

df['cleaned']=df['reviews.text'].apply(lambda x: x.lower())df['cleaned']=df['cleaned'].apply(lambda x: re.sub('\w*\d\w*','', x))df['cleaned']=df['cleaned'].apply(lambda x: re.sub('[%s]' % re.escape(string.punctuation), '', x))?#?Removing?extra?spaces???df['cleaned']=df['cleaned'].apply(lambda?x:?re.sub('?+','?',x))??

?for?index,text?in?enumerate(df['cleaned'][35:40]):???????print('Review?%d:\n'%(index+1),text)??

删除停用词;

词形还原;

创建文档术语矩阵。

?#?Importing?spacy???import?spacy??????#?Loading?model???nlp?=?spacy.load('en_core_web_sm',disable=['parser',?'ner'])?????# Lemmatization with stopwords removal?df['lemmatized']=df['cleaned'].apply(lambda?x:?'?'.join([token.lemma_?for?token?in?list(nlp(x))?if?(token.is_stop==False)]))??

df_grouped=df[['name','lemmatized']].groupby(by='name').agg(lambda?x:'?'.join(x))??df_grouped.head()??

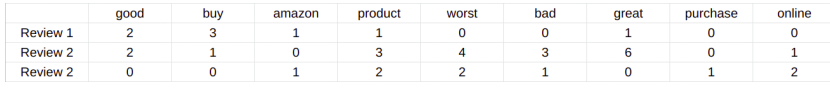

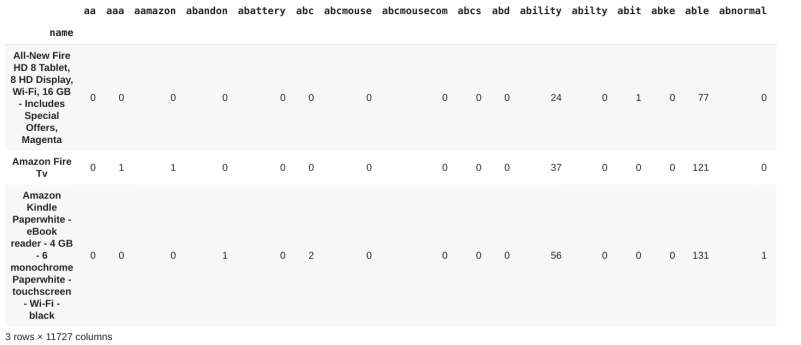

# Creating Document Term Matrixfrom sklearn.feature_extraction.text?import?CountVectorizer?cv=CountVectorizer(analyzer='word')???data=cv.fit_transform(df_grouped['lemmatized'])???df_dtm?=?pd.DataFrame(data.toarray(),?columns=cv.get_feature_names())???df_dtm.index=df_grouped.index???df_dtm.head(3)??

# Importing wordcloud for plotting word clouds and textwrap for wrapping longer textfrom wordcloud import WordCloudfrom textwrap import wrap#?Function?for?generating?word?clouds??def generate_wordcloud(data,title):wc = WordCloud(width=400, height=330, max_words=150,colormap="Dark2").generate_from_frequencies(data)??plt.figure(figsize=(10,8))????plt.imshow(wc,?interpolation='bilinear')??plt.axis("off")plt.title('\n'.join(wrap(title,60)),fontsize=13)??plt.show()??#?Transposing?document?term?matrix??df_dtm=df_dtm.transpose()??#?Plotting?word?cloud?for?each?product??for?index,product?in?enumerate(df_dtm.columns):??generate_wordcloud(df_dtm[product].sort_values(ascending=False),product)

?from?textblob?import?TextBlob???df['polarity']=df['lemmatized'].apply(lambda?x:TextBlob(x).sentiment.polarity)?

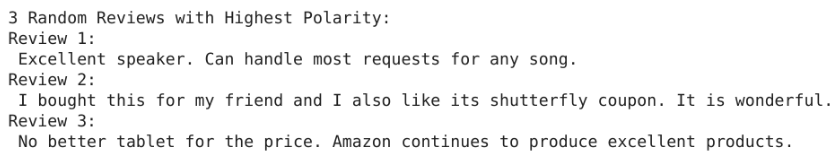

print("3?Random?Reviews?with?Highest?Polarity:")??for?index,review?in?enumerate(df.iloc[df['polarity'].sort_values(ascending=False)[:3].index]['reviews.text']):?????print('Review?{}:\n'.format(index+1),review)?

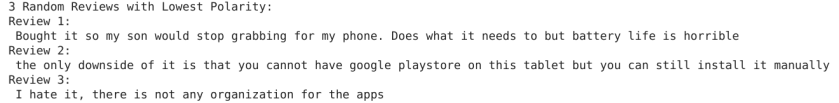

print("3 Random Reviews with Lowest Polarity:")for index,review in enumerate(df.iloc[df['polarity'].sort_values(ascending=True)[:3].index]['reviews.text']):print('Review {}:\n'.format(index+1),review)

?product_polarity_sorted=pd.DataFrame(df.groupby('name')['polarity'].mean().sort_values(ascending=True))??????plt.figure(figsize=(16,8))???plt.xlabel('Polarity')???plt.ylabel('Products')???plt.title('Polarity?of?Different?Amazon?Product?Reviews')??polarity_graph=plt.barh(np.arange(len(product_polarity_sorted.index)),product_polarity_sorted['polarity'],color='purple',)# Writing product names on barfor bar,product in zip(polarity_graph,product_polarity_sorted.index):plt.text(0.005,bar.get_y()+bar.get_width(),'{}'.format(product),va='center',fontsize=11,color='white')# Writing polarity values on graphfor bar,polarity in zip(polarity_graph,product_polarity_sorted['polarity']):plt.text(bar.get_width()+0.001,bar.get_y()+bar.get_width(),'%.3f'%polarity,va='center',fontsize=11,color='black')plt.yticks([])plt.show()

?recommend_percentage=pd.DataFrame(((df.groupby('name')['reviews.doRecommend'].sum()*100)/df.groupby('name')['reviews.doRecommend'].count()).sort_values(ascending=True))??????plt.figure(figsize=(16,8))???plt.xlabel('Recommend?Percentage')???plt.ylabel('Products')???plt.title('Percentage?of?reviewers?recommended?a?product')??recommend_graph=plt.barh(np.arange(len(recommend_percentage.index)),recommend_percentage['reviews.doRecommend'],color='green')# Writing product names on barfor bar,product in zip(recommend_graph,recommend_percentage.index):plt.text(0.5,bar.get_y()+0.4,'{}'.format(product),va='center',fontsize=11,color='white')?#?Writing?recommendation?percentage?on?graph??for bar,percentage in zip(recommend_graph,recommend_percentage['reviews.doRecommend']):plt.text(bar.get_width()+0.5,bar.get_y()+0.4,'%.2f'%percentage,va='center',fontsize=11,color='black')????plt.yticks([])???plt.show()??

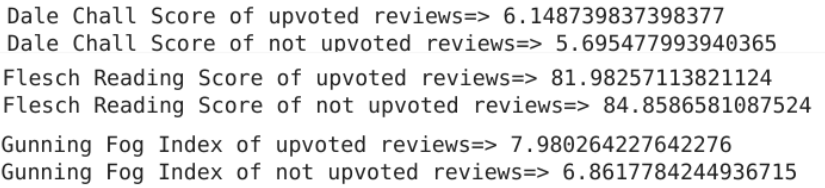

?recommend_percentage=pd.DataFrame(((df.groupby('name')['reviews.doRecommend'].sum()*100)/df.groupby('name')['reviews.doRecommend'].count()).sort_values(ascending=True))???import?textstat???df['dale_chall_score']=df['reviews.text'].apply(lambda?x:?textstat.dale_chall_readability_score(x))???df['flesh_reading_ease']=df['reviews.text'].apply(lambda?x:?textstat.flesch_reading_ease(x))???df['gunning_fog']=df['reviews.text'].apply(lambda?x:?textstat.gunning_fog(x))??????print('Dale?Chall?Score?of?upvoted?reviews=>',df[df['reviews.numHelpful']>1]['dale_chall_score'].mean())???print('Dale?Chall?Score?of?not?upvoted?reviews=>',df[df['reviews.numHelpful']<=1]['dale_chall_score'].mean())??????print('Flesch?Reading?Score?of?upvoted?reviews=>',df[df['reviews.numHelpful']>1]['flesh_reading_ease'].mean())???print('Flesch?Reading?Score?of?not?upvoted?reviews=>',df[df['reviews.numHelpful']<=1]['flesh_reading_ease'].mean())??????print('Gunning?Fog?Index?of?upvoted?reviews=>',df[df['reviews.numHelpful']>1]['gunning_fog'].mean())???print('Gunning?Fog?Index?of?not?upvoted?reviews=>',df[df['reviews.numHelpful']<=1]['gunning_fog'].mean())??

?df['text_standard']=df['reviews.text'].apply(lambda?x:?textstat.text_standard(x))??????print('Text?Standard?of?upvoted?reviews=>',df[df['reviews.numHelpful']>1]['text_standard'].mode())???print('Text?Standard?of?not?upvoted?reviews=>',df[df['reviews.numHelpful']<=1]['text_standard'].mode())??

?df['reading_time']=df['reviews.text'].apply(lambda?x:?textstat.reading_time(x))??????print('Reading?Time?of?upvoted?reviews=>',df[df['reviews.numHelpful']>1]['reading_time'].mean())???print('Reading?Time?of?not?upvoted?reviews=>',df[df['reviews.numHelpful']<=1]['reading_time'].mean())??

顾客喜欢亚马逊的产品。它们令人满意同时易于使用;

亚马逊需要提升 Fire Kids Edition Tablet 这款产品,因为它的负面评论最多。它也是最不被推荐的产品;

大部分的评论都是用简单的英语写的,任何一个五六年级水平的人都很容易理解;

有用的评论的阅读时间是非有用评论的两倍,这意味着人们发现较长的评论更有帮助。

译者简介:李嘉骐,清华大学自动化系博士在读,本科毕业于北京航空航天大学自动化学院,研究方向为生物信息学。对数据分析和数据可视化有浓厚兴趣,喜欢运动和音乐。

「完」

关注公众号:拾黑(shiheibook)了解更多

[广告]赞助链接:

四季很好,只要有你,文娱排行榜:https://www.yaopaiming.com/

让资讯触达的更精准有趣:https://www.0xu.cn/

关注网络尖刀微信公众号

关注网络尖刀微信公众号随时掌握互联网精彩

赞助链接

排名

热点

搜索指数

- 1 中共中央召开党外人士座谈会 7904356

- 2 日本附近海域发生7.5级地震 7809010

- 3 日本发布警报:预计将出现最高3米海啸 7713362

- 4 全国首艘氢电拖轮作业亮点多 7617454

- 5 课本上明太祖画像换了 7522839

- 6 中国游客遇日本地震:连滚带爬躲厕所 7425940

- 7 亚洲最大“清道夫”落户中国洋浦港 7329345

- 8 日本地震当地居民拍下自家书柜倒塌 7233469

- 9 银行网点正消失:今年超9000家关停 7141392

- 10 “人造太阳”何以照进现实 7044816

数据分析

数据分析